It’s paintbrushes at dawn as artists feel the pressure of AI-generated art


If you’ve been anywhere close to the interwebs recently, you’ll have heard of DALL-E and MidJourney. The types of art these neural networks can generate, combined with a deeper understanding of the technology’s strengths and weaknesses, mean that we are facing a whole new world of hurt. Artists are often the butt of tasteless jokes (How do you get a waiter’s attention? Call out “Hey, artist!?”), and computer-generated art is another punchline in the “they took our jobs” narrative of human versus machine.

To me, the interesting part of this is that robots and machines taking over certain jobs has been begrudgingly accepted, because those jobs are repetitive, boring, dangerous or just generally awful. Machines welding car chassis do a far better job, faster and more safely, than humans ever could. Art, however, is another matter.


In the recent movie “Elvis,” Baz Luhrmann puts a quote in Colonel Tom Parker’s mouth, saying that a great act “gives the audience feelings they weren’t sure they should enjoy.” To me, that’s one of the greatest quotes I’ve heard about art in a while.

Commercial art is nothing new; whether your mind goes to Pixar movies, music or the prints that come with the frames in Ikea, art has been peddled at great scale for a long time. But what it has in common, by and large, is that it was created by humans with a creative vision of some sort.

The picture at the top of this article was generated using MidJourney, after I fed the algorithm a slightly ludicrous prompt: A man dances as if Prozac was a cloud of laughter. As someone who’s had a lifetime of mental health wobbles, including somewhat severe depression and anxiety, I was curious what a machine would come up with. And, my goodness: none of these generated graphics are something I’d have conceptually come up with myself. But, not gonna lie, they did something to me. I feel more graphically represented by these machine-generated works of art than by almost anything else I’ve seen. And the wild thing is, I did that. These illustrations weren’t drawn or conceptualized by me; all I did was type a bizarre prompt into Discord, but these images wouldn’t have existed if it hadn’t been for my hare-brained idea. Not only did it come up with the image at the top of this article, it spat out four completely different, and oddly perfect, illustrations of a concept that’s hard to wrap my head around:

[Gallery: four MidJourney illustrations generated from the Prozac prompt]

It’s hard to put into words exactly what that means for conceptual illustrators around the world. When anyone can, at the click of a button, generate artwork of pretty much anything they can think of, emulating any style, in minutes, what does it mean to be an artist?

Over the past week or so, I may have gone a little overboard, generating hundreds and hundreds of images of Batman. Why Batman? I have no idea, but I wanted a theme to help me compare the various styles that MidJourney is able to create. If you really want to go deep down the rabbit hole, check out AI Dark Knight Rises on Twitter, where I’m sharing some of the best generated pieces I’ve come across. There are hundreds and hundreds of candidates, but here is a selection showing the breadth of styles available:

[Gallery: a selection of MidJourney-generated Batman images in a range of styles]

Generating all of the above, and hundreds more, ran into only three bottlenecks: the amount of money I was willing to spend on my MidJourney subscription, the depth of creativity I could bring to the prompts, and the fact that I could only generate 10 designs concurrently.

Now, I have a visual mind, but there isn’t an artistic bone in my body. Not that I need one. I come up with a prompt (for example, Batman and Dwight Schrute are in a fistfight), and the algo spits out four versions of something. From there, I can re-roll (i.e., generate four new images from the same prompt), render a high-res version of one of the images or iterate based on one of the versions.

Batman and Dwight Schrute are in a fistfight. Because… well, why not. Image Credits: Haje Kamps / MidJourney

The only real shortcoming of the algorithm is that it favors a “you’ll take what you’re given” approach. Of course, you can make your prompts far more detailed to get more control over the final image, both in terms of what’s going on in it and its style and other parameters. If you are a visual director like me, the algorithm is often frustrating, because my creative vision is hard to capture in words, and even harder for the AI to interpret and render. But the scary thing (for artists) and the exciting thing (for non-artists) is that this technology is in its very infancy, and we’re going to get a lot more control over how images are generated.

For example, I tried the following prompt: Batman (on the left) and Dwight Schrute (on the right) are in a fistfight in a parking lot in Scranton, Pennsylvania. Dramatic lighting. Photo realistic. Monochrome. High detail. If I’d given that prompt to a human, I expect they’d tell me to sod off for talking to them as if they were a machine, but if they did create a drawing, I suspect they would interpret the prompt in a way that makes conceptual sense. I gave the AI a whole bunch of tries, but few of the illustrations made me think “yep, this is what I was looking for.”

[Gallery: MidJourney attempts at the Batman versus Dwight Schrute parking-lot prompt]

What about copyright?

There’s another interesting quirk here: a lot of the styles are recognizable, and some of the faces are recognizable, too. Take this one, for example, where I’m prompting the AI to imagine Batman as Hugh Laurie. I dunno about you, but I’m hella impressed; it’s got the style of Batman, and Laurie is recognizable in the drawing. What I don’t have any way of knowing, though, is whether the AI ripped off another artist wholesale, and I wouldn’t love to be MidJourney or TechCrunch in a courtroom trying to explain how this went horribly wrong.

Hugh Laurie as Batman. Image Credits: MidJourney with a prompt by Haje Kamps under a CC BY-NC 4.0 license.

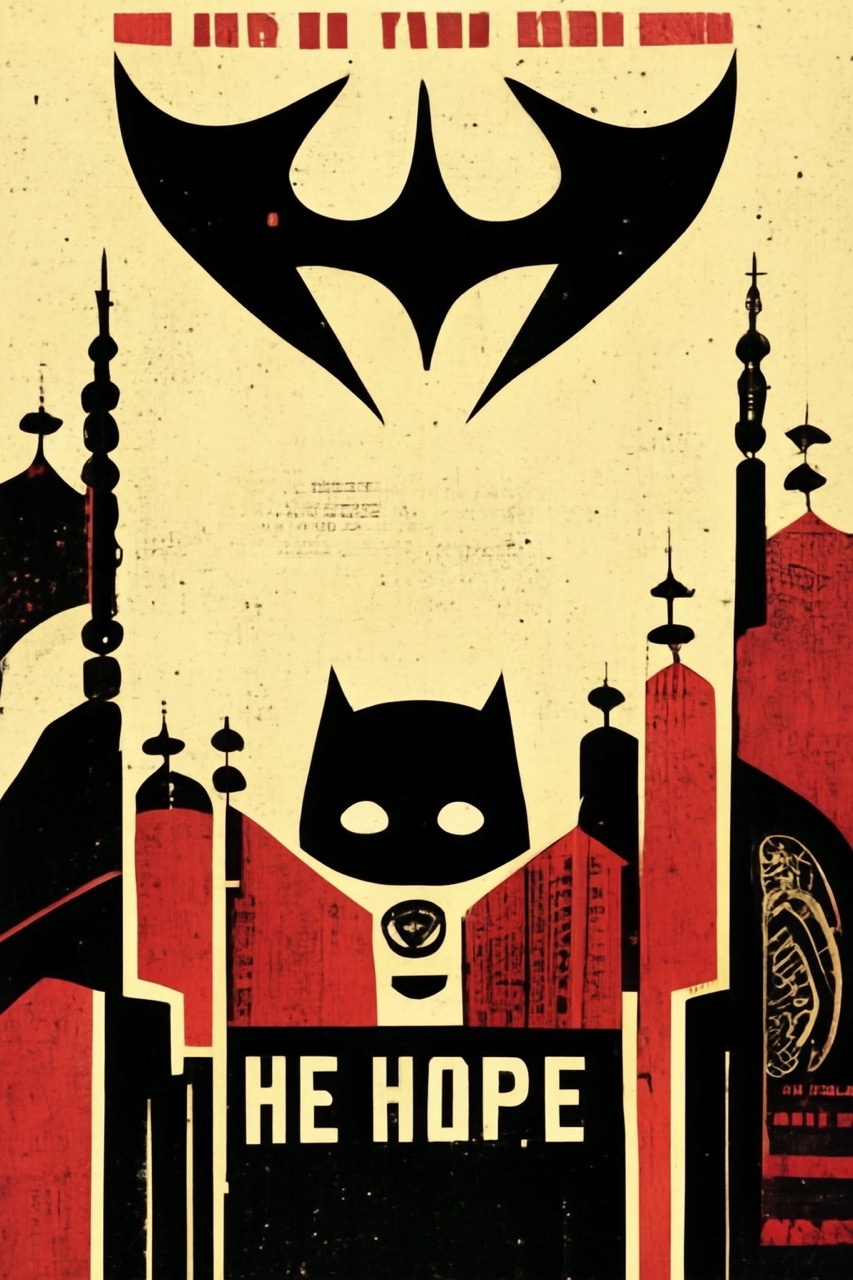

This sort of problem comes up in the art world more often than you’d think. One example is the Shepard Fairey case, where the artist allegedly based his famous Barack Obama “Hope” poster on a photograph from an AP freelance photographer, Mannie Garcia. The whole thing became a fantastic mess, especially when a bunch of other artists started creating art in the same style. Now, we have a multi-layered plagiarism sandwich, where Fairey is both allegedly plagiarizing someone else, and being plagiarized in turn. And, of course, it’s possible to generate AI-art in Fairey’s style, which complicates matters infinitely further. I couldn’t resist giving it a whirl: Batman in the style Shepard Fairey with the text HOPE at the bottom.

HE HOPE. A great example of how the AI can get close, but no cigar, to the specific vision I had for this image. And yet, the style is close enough to Fairey’s that it is recognizable. Image Credits: Haje Kamps / MidJourney

Kyle has a lot more thoughts about where the legal future lies for this tech:

So where does that leave artists?

I think the scariest thing about this development is how quickly we’ve gone from a world where creative pursuits such as photography, painting and writing were safe from machines to one where that’s no longer quite as true. As with all technology, there’s very soon going to be a time when you can no longer trust your own eyes or ears; machines are going to learn and evolve at breakneck speed.

Of course, it’s not all doom and gloom; if I were a graphic artist, I’d start using the latest generation of these tools for inspiration. Time and again, I’ve been surprised by how well something came out, and then thought to myself, “but I wish it was slightly more [insert creative vision here].” If I had the graphic design skills, I could take what I have and turn it into something closer to my vision.

That may not be common in the world of art yet, but in engineering, these technologies have existed for a long time. For printed circuit boards (PCBs), machines have been creating first versions of trace layouts for many years, often to be tweaked by engineers, of course. The same is true for physical product design; as far back as five years ago, Autodesk was showing off its generative design prowess:

It’s a brave new world for every job (including my own: I had an AI write the bulk of a TechCrunch story last year) as neural networks get smarter and gain ever more comprehensive datasets to work with.

Let me close out on this extremely disturbing image, where several of the people the AI placed in the image are recognizable to me and other members of the TechCrunch staff:

“A TechCrunch Disrupt staff group photo with confetti.” Image Credits: MidJourney with a prompt by Haje Kamps under a CC BY-NC 4.0 license.

The MidJourney images used in this post are all licensed under Creative Commons non-commercial attribution licenses. Used with explicit permission from the MidJourney team.