AI is confusing. This tool helps you make sense of the tech

A new data visualization tool lets people in creative fields explore their ethical considerations around AI.

The Creative AI Magnifier was developed by MANY, a design studio headed by Andrew Shea, who is also an associate professor of integrated design at The New School’s Parsons School of Design, which helped fund the tool’s development. Shea says the tool was inspired by a course he and colleague Jeongki Kim taught on AI, creativity, and social justice, which led him to explore the literature on the subject.

He came to realize that in all the public discussions of ethics and AI, there wasn’t much geared toward the needs of the creative community, a gap that has only grown more pressing as generative AI tools have emerged and gone viral.

“I wasn’t finding anything that was really tailored to artists, and designers, and writers, and musicians, and other creative folks,” he says. “And so that’s when I decided there was an opportunity to put something together that would address that.”

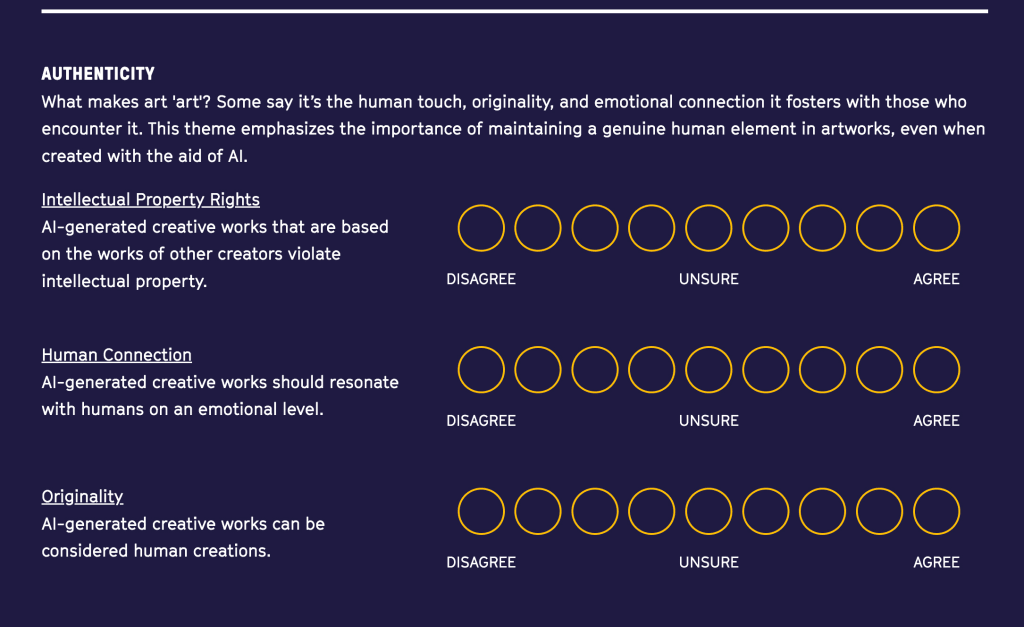

The Magnifier lets users indicate the degree to which they agree or disagree with particular statements about AI ethics, like that “AI-generated creative works that are based on the works of other creators violate intellectual property” or that “creators should specify when and how they use AI tools in their work.” It also asks a few survey questions about users’ creative practices. It’s designed to be more than just a poll, prompting Shea’s students and others who use it to begin to sort out their own feelings about AI’s place in creative fields and their own work.

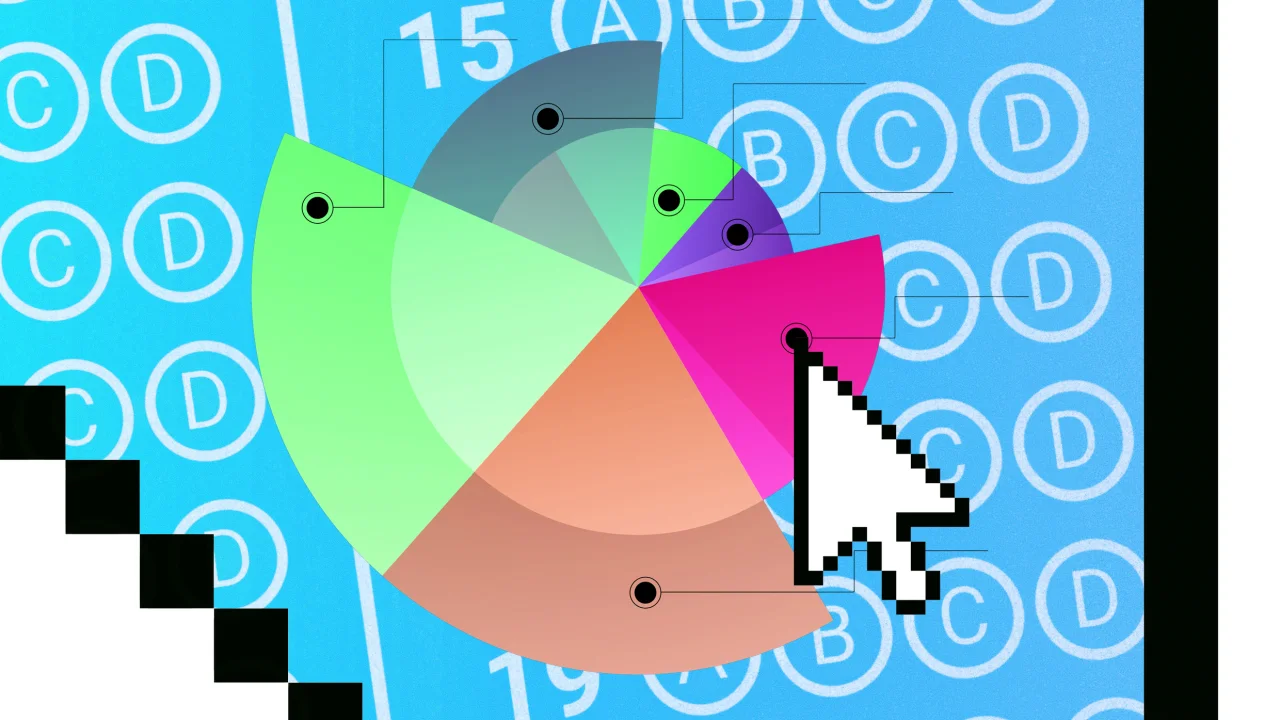

The process is estimated to take about four minutes, after which the tool generates a circular rose chart indicating the visitor’s weightings of the various statements. Users can also visit a gallery of past visualizations, presented anonymously except for the creator’s indicated discipline, such as “writing” or “ceramics.” The rose charts—essentially a variant of the pie chart that’s been used at least since Florence Nightingale created one to illustrate causes of British Army mortality in the Crimean War—were chosen as a simple and elegant way to document the user responses, Shea says.
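For readers curious how a rose chart differs from an ordinary pie chart, here is a minimal sketch in Python. It is not the Magnifier's actual code, which the article does not describe. The function name `rose_wedges`, the 1-to-5 agreement scale, and the sample responses are all assumptions made for illustration. The idea it shows is the standard one: every wedge spans the same angle, and the radius varies so that each wedge's area tracks its value.

```python
import math


def rose_wedges(scores, max_score=5, max_radius=1.0):
    """Return one (start_angle, end_angle, radius) tuple per score, angles in radians.

    Hypothetical helper: assumes each score is a level of agreement
    between 0 and max_score.
    """
    if not scores:
        return []
    step = 2 * math.pi / len(scores)  # every wedge gets the same angular width
    wedges = []
    for i, score in enumerate(scores):
        # A sector's area is 0.5 * r**2 * angle, so scaling the radius by
        # sqrt(score) keeps the wedge's area proportional to the score.
        radius = max_radius * math.sqrt(score / max_score)
        wedges.append((i * step, (i + 1) * step, radius))
    return wedges


# Hypothetical responses to four statements on an assumed 1-to-5 scale.
responses = [5, 2, 4, 1]
for start, end, radius in rose_wedges(responses):
    print(f"{math.degrees(start):6.1f} to {math.degrees(end):6.1f} deg, radius {radius:.2f}")
```

Scaling the radius by the square root is a design choice: if the radius grew in direct proportion to the score, a wedge with twice the score would cover four times the area and overstate the difference.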

“I found some code that would allow me to quickly implement that in a way which I think is kind of artful,” he says. “And that’s kind of the approach of the website in general, to find ways to create the information in a digestible way that wouldn’t require somebody to read a thesis, or dissertation, or even a chapter of a book, but to be able to quickly understand how these issues might relate to them.”

Shea and his colleagues are beginning to use the Magnifier in classes as a way to spark discussions, and the tool is accompanied by hypothetical scenarios related to the various topics it covers, along with discussion questions and suggestions for further reading. As more people use the Magnifier, their collective priorities may also come to guide the research Shea does around AI, creativity, and ethics, he says.

The Magnifier is likely to continue to evolve as people use it, and Shea may tweak the set of prompts based on people’s interests and add features such as an easier way to export and share visualizations. In the meantime, he’s hopeful that it will help creative people, particularly students and those early in their careers, think about the AI tools that are becoming increasingly ubiquitous.

“We know it’s happening,” Shea says. “We know it’s showing up in each and every one of our classes, whether people are telling us or not, and so we want to make sure that we’re at least acknowledging and helping people make decisions that are in line with their values and their ethos.”